| import threading

import time

from bs4 import BeautifulSoup

import codecs

import requests

start_time = time.time()

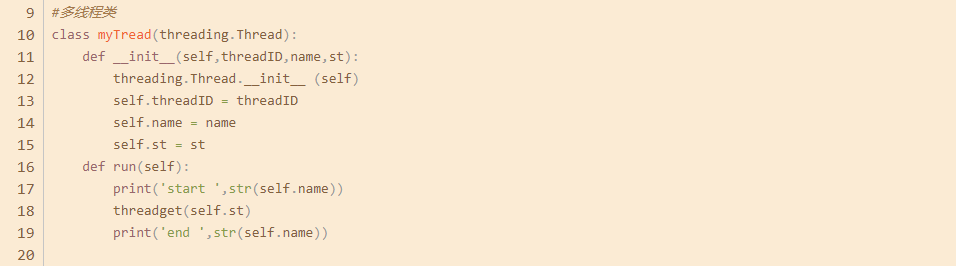

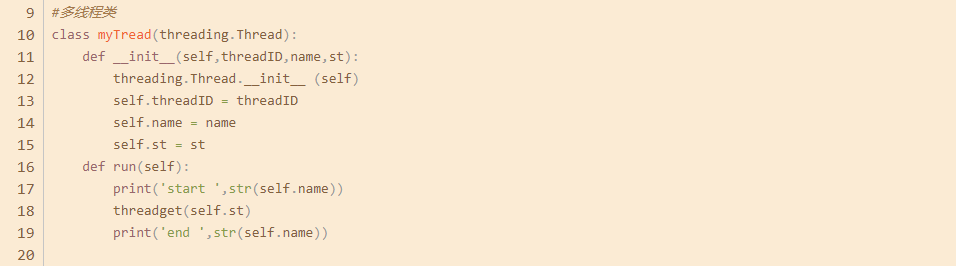

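class myTread(threading.Thread):
    """Worker thread that downloads every thread_count-th chapter, starting at index st."""

    def __init__(self, threadID, name, st):
        threading.Thread.__init__(self)
        self.threadID = threadID
        self.name = name
        self.st = st  # index of the first chapter this thread handles

    def run(self):
        print("start ", str(self.name))
        threadget(self.st)
        print("end ", str(self.name))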

txtcontent = {}

server_url = 'https://www.idejian.com'

headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36'
}

def gethtml(url):
    """Fetch a page and return its body decoded as UTF-8, ignoring undecodable bytes."""
    html = requests.get(url=url, headers=headers).content.decode('UTF-8', 'ignore')
    return html

txt_name = []

chaptername = []

chapteraddress = []

def getchapter(html):
    """Collect the book title plus every chapter name and URL from the book page."""
    soup = BeautifulSoup(html, 'lxml')
    try:
        name = soup.find('div', class_="detail_bkname").find('a')
        txt_name.append(name.string)
        alist = soup.find('ul', class_="catelog_list").find_all('a')
        for link in alist:  # renamed from `list`, which shadowed the builtin
            chaptername.append(link.string)
            href = server_url + link['href']
            chapteraddress.append(href)
    except Exception:
        print('Chapters not found')
        return False

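def getdetail(html):
    """Return the chapter text: the concatenated <p> contents of the main body div."""
    soup = BeautifulSoup(html, 'lxml')
    try:
        content = ''
        pstring = soup.find_all('div', class_="h5_mainbody")
        # When more than one div matches, use the second one.
        if len(pstring) > 1:
            pstrings = pstring[1].find_all('p')
        else:
            pstrings = pstring[0].find_all('p')
        for p in pstrings:
            # get_text() also works for <p> tags with nested markup, where .string is None
            content += p.get_text()
        return content
    except Exception:
        print("Error")
        return "Error"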

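def threadget(st):
    """Download chapters st, st + thread_count, st + 2 * thread_count, ..."""
    total = len(chaptername)  # renamed from `max`, which shadowed the builtin
    while st < total:
        url = str(chapteraddress[st])
        html = gethtml(url)
        content = getdetail(html)
        txtcontent[st] = content  # keyed by chapter index so the order can be restored later
        print('Downloaded ' + str(chaptername[st]))
        st += thread_count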

def getname(name):
    """Search the site for a novel; return the relative URL of the first hit, or False."""
    url = 'https://www.idejian.com/search?keyword=' + name
    html = gethtml(url)
    soup = BeautifulSoup(html, 'lxml')
    try:
        namelist = soup.find('ul', class_="rank_ullist").find('div', class_="rank_bkname").find('a')
        print('Starting download of novel:', namelist.string)
        return namelist['href']
    except Exception:
        print('Novel not found')
        return False

txt_id = str(input('Enter the name of the novel you want to download\n'))
book_url = getname(txt_id)
if book_url:
    url = server_url + book_url
    html = gethtml(url)
    getchapter(html)
    thread_list = []
    thread_count = int(input("Enter the number of threads to use\n"))
    for i in range(thread_count):
        thread1 = myTread(i, str(i), i)
        thread_list.append(thread1)
    for t in thread_list:
        t.daemon = False  # setDaemon() is deprecated since Python 3.10
        t.start()
    for t in thread_list:
        t.join()
    print('\nAll worker threads have finished')
    # Sorted chapter indices, so chapters are written in their original order
    txtcontent1 = sorted(txtcontent)
    # The ./小说/ directory must already exist
    with codecs.open('./小说/' + txt_name[0] + '.txt', 'w', encoding='utf-8') as file:
        chaptercount = len(chaptername)
        for ch in range(chaptercount):
            title = str(chaptername[ch]) + '\n'
            content = str(txtcontent[txtcontent1[ch]])
            file.write(title + content)
    end_time = time.time()
    print("Download finished, total time", int(end_time - start_time), "seconds")

|
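The worker threads in the script split the chapter list by striding: the thread started with index `st` handles chapters `st`, `st + thread_count`, `st + 2 * thread_count`, and so on. Below is a minimal sketch of just that partitioning, with no network access. The helper names `stride_indices` and `partition` and the sample numbers are hypothetical, introduced only for illustration; they do not appear in the script.

```python
def stride_indices(start, total, step):
    """Return the chapter indices a worker starting at `start` would visit."""
    return list(range(start, total, step))


def partition(total, thread_count):
    """Map each worker id to the chapter indices it would handle."""
    return {worker: stride_indices(worker, total, thread_count)
            for worker in range(thread_count)}


if __name__ == "__main__":
    # 10 chapters shared among 3 workers (sample numbers, not taken from the script)
    for worker, indices in partition(10, 3).items():
        print(worker, indices)
```

Because each index falls into exactly one worker's stride, the shared dictionary ends up with one entry per chapter, which is what lets the final loop write the chapters back in order.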